Data center microsegmentation can provide enhanced security for east-west traffic within the data center.

Historical Data Center Security Protection:

Data centers historically have been protected by perimeter security technologies. These technologies include firewalls, intrusion detection and prevention platforms, and custom devices, all designed to aggressively analyze incoming traffic and help ensure that only authorized users can access data center resources. These services interdict and analyze north-south traffic: that is, traffic into and out of the data center. They can be very effective at the perimeter, but they generally have not been provisioned to analyze device-to-device traffic within the data center, commonly referred to as east-west traffic.

Modern application design, particularly the popular N-tier (web, application, and database) model, has vastly increased the ratio of east-west traffic to north-south traffic. By some estimates, data centers may carry five times as much east-west traffic as north-south traffic, because dozens or hundreds of web, application, and database servers communicate with one another to deliver services.
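The east-west/north-south distinction can be made concrete by classifying flow records by their endpoints. The following Python sketch assumes a hypothetical internal address space (10.0.0.0/8 and 172.16.0.0/12) and a made-up sample of flows with byte counts; a flow is east-west when both endpoints are inside the data center and north-south otherwise.

```python
from ipaddress import ip_address, ip_network

# Hypothetical internal address space of the data center (an assumption).
DATA_CENTER_PREFIXES = [ip_network("10.0.0.0/8"), ip_network("172.16.0.0/12")]


def is_internal(addr: str) -> bool:
    """True if the address belongs to one of the data center prefixes."""
    ip = ip_address(addr)
    return any(ip in net for net in DATA_CENTER_PREFIXES)


def classify(src: str, dst: str) -> str:
    """East-west if both endpoints are inside the data center, else north-south."""
    return "east-west" if is_internal(src) and is_internal(dst) else "north-south"


def east_west_ratio(flows: list[tuple[str, str, int]]) -> float:
    """Ratio of east-west bytes to north-south bytes in a sample of flow records."""
    totals = {"east-west": 0, "north-south": 0}
    for src, dst, num_bytes in flows:
        totals[classify(src, dst)] += num_bytes
    if totals["north-south"] == 0:
        return float("inf")
    return totals["east-west"] / totals["north-south"]


# Made-up sample: client -> web, web -> app, app -> db.
sample = [
    ("203.0.113.5", "10.1.1.10", 200),
    ("10.1.1.10", "10.1.2.20", 500),
    ("10.1.2.20", "10.1.3.30", 500),
]
print(east_west_ratio(sample))  # 5.0
```

A single client request fans out into several internal hops, which is why the internal share of traffic grows with the number of tiers.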

Classic data center designs assume that all east-west traffic occurs in a well-protected trust zone. Any device inside the data center is generally authorized to communicate with any other device in the data center. Because all data center devices sit inside a hardened security perimeter, they are assumed to be safe from outside incursion.

Recent data breaches, however, have shown that this assumption is not always valid. Whether through advanced social engineering or through attacks launched from compromised third parties, determined malefactors have shown the ability to penetrate one data center device and use it as a platform to launch further attacks inside the data center.

Microsegmentation and the New Data Center:

Microsegmentation divides the data center into smaller, more-protected zones. Instead of a single, hardened perimeter defense with free traffic flow inside the perimeter, a microsegmented data center has security services provisioned at the perimeter, between application tiers, and even between devices within tiers. The theory is that even if one device is compromised, the breach will be contained to a smaller fault domain.
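The containment argument can be sketched as a reachability question: starting from one compromised host, which other hosts could an attacker reach by opening connections that policy permits? The Python sketch below uses a hypothetical five-host, three-tier application and compares a flat trust zone with a microsegmented policy that allows only web-to-app and app-to-db connections. It deliberately ignores reply traffic and real vulnerabilities; it only measures the size of the reachable fault domain.

```python
from collections import deque
from typing import Callable, Iterable

Policy = Callable[[str, str], bool]


def reachable_from(start: str, hosts: Iterable[str], allowed: Policy) -> set[str]:
    """Breadth-first search over the flows a policy permits.

    The result approximates how far an attacker who controls `start` could
    move laterally by opening new connections to other hosts.
    """
    hosts = list(hosts)
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for nxt in hosts:
            if nxt not in seen and allowed(current, nxt):
                seen.add(nxt)
                queue.append(nxt)
    return seen


# Hypothetical three-tier application: host name -> tier.
TIERS = {"web1": "web", "web2": "web", "app1": "app", "app2": "app", "db1": "db"}

# Permitted tier-to-tier connections in the microsegmented design.
PERMITTED_TIER_PAIRS = {("web", "app"), ("app", "db")}


def flat(src: str, dst: str) -> bool:
    """Classic trust zone: any internal device may connect to any other."""
    return src != dst


def segmented(src: str, dst: str) -> bool:
    """Microsegmented: only web->app and app->db; nothing within a tier."""
    return (TIERS[src], TIERS[dst]) in PERMITTED_TIER_PAIRS


print(sorted(reachable_from("app1", TIERS, flat)))
# ['app1', 'app2', 'db1', 'web1', 'web2']
print(sorted(reachable_from("app1", TIERS, segmented)))
# ['app1', 'db1']
```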

As a security design, microsegmentation makes sense. However, if such a data center relies on traditional firewall rules and manually maintained access control lists, it will quickly become unmanageable. An organization cannot simply create a list of prohibited traffic between nodes: as attack vectors, vulnerabilities, and servers multiply, that list grows combinatorially, because each new threat must be blocked for every pair of nodes.
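A rough rule count shows why a list of prohibited traffic does not scale. The Python sketch below rests on a simplifying assumption: a deny list needs one rule per (source, destination, threat) combination on every ordered pair of servers that is not supposed to communicate, while a default-deny allow list needs one rule per permitted pair. The server, threat, and permitted-pair counts are illustrative, not measurements.

```python
def deny_list_size(servers: int, threats: int, permitted_pairs: int) -> int:
    """Rules needed to block each known threat on each non-permitted ordered pair.

    Simplifying assumption: one rule per (source, destination, threat).
    """
    ordered_pairs = servers * (servers - 1)
    return (ordered_pairs - permitted_pairs) * threats


def allow_list_size(permitted_pairs: int) -> int:
    """With default deny, one rule per permitted pair suffices, whatever the threats."""
    return permitted_pairs


for servers, permitted in [(100, 300), (200, 600)]:
    print(servers,
          deny_list_size(servers, threats=50, permitted_pairs=permitted),
          allow_list_size(permitted))
# 100 480000 300
# 200 1960000 600
```

In this model, doubling the server count roughly quadruples the deny list, and every new threat multiplies it again, while the allow list grows only with the flows the applications actually need.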

The only workable solution is a trusted declarative (whitelist) security model in which security services specify the traffic that is permitted and everything else is denied. This solution requires tight integration between application and network services, and that integration is lacking in most data center deployments. Indeed, network analysis frequently reveals that applications use unexpected traffic flows to deliver services, reflecting the traditional disconnect between application, server, and network managers.
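A whitelist model evaluates each flow against explicit permit entries and denies everything else. The same check, run against observed traffic, surfaces the unexpected flows mentioned above. In this Python sketch the tier names, ports, and observed flows are hypothetical, and flows are identified only by source tier, destination tier, protocol, and destination port.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src_tier: str
    dst_tier: str
    protocol: str  # "tcp" or "udp"
    port: int


# Hypothetical whitelist: only these (source tier, destination tier, protocol,
# port) combinations are permitted; everything else is implicitly denied.
WHITELIST = {
    ("web", "app", "tcp", 8080),
    ("app", "db", "tcp", 5432),
}


def permitted(flow: Flow) -> bool:
    """Default deny: a flow passes only if it matches an explicit whitelist entry."""
    return (flow.src_tier, flow.dst_tier, flow.protocol, flow.port) in WHITELIST


def unexpected_flows(observed: list[Flow]) -> list[Flow]:
    """Observed flows that the declared policy does not account for."""
    return [f for f in observed if not permitted(f)]


observed = [
    Flow("web", "app", "tcp", 8080),
    Flow("app", "db", "tcp", 5432),
    Flow("web", "db", "tcp", 5432),  # web tier talking straight to the database
]
print(unexpected_flows(observed))
# [Flow(src_tier='web', dst_tier='db', protocol='tcp', port=5432)]
```

Before enforcing such a policy, each unexpected flow has to be confirmed with the application owners as either a legitimate dependency to add to the whitelist or traffic that should be blocked.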

Application-Centric Networking:

Applications provide critical access to company data and reporting metrics associated with business outcomes. Essentially, delivery of secure applications is the most important task of the data center, so it necessarily follows that networking and infrastructure must be application-centric. The terms and constructs of applications must determine the flow, permissions, and services that the network delivers. The application profile must be able to tell the network how to deliver the required services.

This approach is known as a declarative model, which is an instantiation of promise theory. Devices are told what services to provision, and they promise either to provision them or to tell the control system what they can do. The application profile specifies the policy that must be provisioned. The network administrator declares that intended policy to the network devices, which then configure themselves to deliver the services (filtering, inspection, load balancing, quality of service [QoS], etc.) that are necessary for that application. This approach provides the best way to manage applications and network devices at scale.
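The promise-theory exchange can be sketched in a few lines of Python. Everything here is hypothetical (the `Device`, `Promise`, and `declare` names and the service strings): the controller declares the services an application needs, and each device configures what it supports and reports back what it cannot deliver, rather than executing a command script supplied by the controller.

```python
from dataclasses import dataclass, field


@dataclass
class Promise:
    """A device's answer to declared intent: what it will and cannot deliver."""
    device: str
    will_provide: set[str]
    cannot_provide: set[str]


@dataclass
class Device:
    name: str
    capabilities: set[str]
    active_services: set[str] = field(default_factory=set)

    def receive_intent(self, required: set[str]) -> Promise:
        """The device, not the controller, decides how to realize the intent."""
        supported = required & self.capabilities
        self.active_services |= supported  # the device configures itself
        return Promise(self.name, supported, required - self.capabilities)


def declare(policy: set[str], devices: list[Device]) -> list[Promise]:
    """Declare one application policy to every device and collect the promises."""
    return [d.receive_intent(policy) for d in devices]


leaf = Device("leaf1", {"filtering", "qos"})
adc = Device("adc1", {"load-balancing"})
for promise in declare({"filtering", "load-balancing", "qos"}, [leaf, adc]):
    print(promise.device, sorted(promise.will_provide),
          sorted(promise.cannot_provide))
# leaf1 ['filtering', 'qos'] ['load-balancing']
# adc1 ['load-balancing'] ['filtering', 'qos']
```

The returned promises give the control system the information it needs to place each service on a device that can actually deliver it.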

The declarative model contrasts sharply with the traditional imperative model. In the imperative model, operators manually configure each network and Layer 4 through 7 device, specifying such parameters as VLANs, IP addresses, and TCP and User Datagram Protocol (UDP) ports. Even with scripting and third-party management tools, this process is a time-consuming and complicated endeavor. Moreover, configurations (such as firewall rules and access control lists [ACLs]) are often left unchanged as the network load changes, because altering a series of manually maintained ACLs can have unanticipated consequences. This approach leads to longer and longer firewall rule sets, longer and more complicated processing, and increased device load. As data centers have grown from tens to hundreds to thousands of devices, the imperative model is no longer sufficient.

With the declarative model, the responsibility for interpreting policy falls to the network, and the responsibility for programming devices falls to the devices themselves. This model has some important advantages over the imperative model:

● Scalability: Network management capability scales linearly with the network device count.

● Intelligence: The network maintains a logical view of all devices, endpoints, applications, and policy and intelligently specifies the subset of that view that each device needs to maintain.

● Holistic view of the data center: Services can truly be made available on demand and provisioned more efficiently, and unused services can be retired.
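The "Intelligence" point, where each device receives only the subset of policy it needs, can be illustrated with a small Python sketch. The endpoint locations, group names, and rules are hypothetical; the sketch gives each leaf switch only the rules that involve a group with at least one endpoint attached to that leaf.

```python
from collections import defaultdict

# Hypothetical controller state: where each endpoint is attached, which tier
# (group) it belongs to, and the permitted group-to-group flows.
ENDPOINT_LOCATION = {"web1": "leaf1", "web2": "leaf2", "app1": "leaf2", "db1": "leaf3"}
ENDPOINT_GROUP = {"web1": "web", "web2": "web", "app1": "app", "db1": "db"}
POLICY = [("web", "app", "tcp/8080"), ("app", "db", "tcp/5432")]


def rules_per_device() -> dict[str, set[tuple[str, str, str]]]:
    """Give each leaf only the rules that touch groups present on that leaf."""
    groups_on_leaf = defaultdict(set)
    for endpoint, leaf in ENDPOINT_LOCATION.items():
        groups_on_leaf[leaf].add(ENDPOINT_GROUP[endpoint])

    return {
        leaf: {rule for rule in POLICY if rule[0] in groups or rule[1] in groups}
        for leaf, groups in groups_on_leaf.items()
    }


for leaf, rules in sorted(rules_per_device().items()):
    print(leaf, sorted(rules))
# leaf1 [('web', 'app', 'tcp/8080')]
# leaf2 [('app', 'db', 'tcp/5432'), ('web', 'app', 'tcp/8080')]
# leaf3 [('app', 'db', 'tcp/5432')]
```

When an endpoint moves, only the affected leaves need a recomputed subset, which is one reason management effort can track device count rather than total policy size.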

It’s About Policy:

An application-centric view of the infrastructure allows applications to request services from the network, and it provides the network intelligence to efficiently deliver those services. Security, whether macrosegmented or microsegmented, is just another service.

Security must be part of the overall data center policy. It must be considered and provisioned as an integral part of the services that the data center provides; it cannot stand alone. Security is usually provisioned as one of a series of services to be applied in a chain. Any modern data center must provide security and segmentation as one of the many services that are provisioned as dictated by the overall policy and compliance objectives.

Microsegmentation may require hundreds or thousands of security policy rules, and it would be very difficult to provision all those rules manually. A declarative model enables the scale required, because the administrator only needs to pass the security and services policies to the network, which then interprets them and provisions the physical and virtual devices as needed. The administrator defines these policies within the logical model, which is an abstracted view of the network and everything connected to it. One important advantage of this logical model is that every device and service is an object, and logical objects can be created, replicated, modified, and applied as needed. One security and service policy can be applied a hundred times, or a hundred different custom policies can be applied. This flexibility and exceptional level of control are inherent in the abstraction of the physical network into the logical model.
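Treating a policy as an object that can be applied many times or copied and customized looks roughly like the following Python sketch. `ServicePolicy` and `apply` are hypothetical names for illustration, not part of any Cisco API; the point is that one definition yields a hundred bindings, and a variant is derived by copying the object with a few fields changed.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ServicePolicy:
    """A reusable logical object: permitted traffic plus the services to apply."""
    name: str
    permitted_ports: tuple[int, ...]
    services: tuple[str, ...]


def apply(policy: ServicePolicy, consumers: list[str],
          provider: str) -> list[tuple[str, str, str]]:
    """Bind one policy object between each consumer group and a provider group."""
    return [(consumer, provider, policy.name) for consumer in consumers]


# One definition, applied a hundred times.
base = ServicePolicy("web-to-app", (8080,), ("firewall", "load-balancer"))
bindings = apply(base, [f"web-{i}" for i in range(100)], "app")

# A customized copy: same services, different permitted port.
tls = replace(base, name="web-to-app-tls", permitted_ports=(8443,))
print(len(bindings), tls.services)  # 100 ('firewall', 'load-balancer')
```

Because the object is immutable, changing the base policy means publishing a new version, which the network can then re-provision everywhere it is bound.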

Requirements for the Modern Data Center

To deliver this policy effectively, the data center infrastructure must also support:

● Virtual servers, with flexible placement and workload mobility: Virtual machine managers and the network must communicate with each other, enabling rapid virtual machine and service creation and virtual switch configuration for network policy.

● Physical servers, with flexible placement and mobility: Physical bare-metal servers are and will continue to be widely deployed and used. The data center infrastructure must not treat them as less important than virtual servers. They must have access to the same policies, services, and performance as virtual servers.

● Hypervisors from a number of vendors as well as open-source hypervisors: Most data centers do not rely on a single hypervisor, and an infrastructure that effectively supports only one hypervisor drastically restricts performance and service flexibility.

● Open northbound interfaces to orchestration platforms and development and operations (DevOps) tools, such as OpenStack, Puppet, and Chef: Network automation goes well beyond virtual machine management, and the infrastructure needs to support a wide range of automation platforms.

● Open southbound protocols to a broad ecosystem of physical and virtual devices: Security and other services are provided by a number of vendors, and the modern data center infrastructure must support those devices in the same declarative manner that it supports routers and switches.

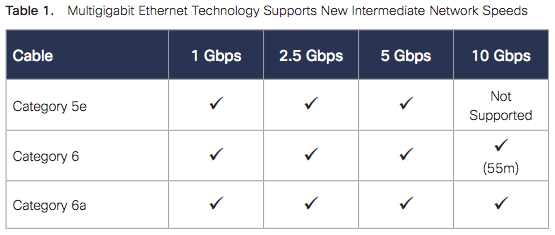

● Services at line rate, whether 1, 10, or 40 Gbps or beyond: Today’s data center traffic patterns rely on consistently low latency, high speed, and efficient fabric utilization, and any infrastructure that doesn’t provide all three should not be considered. All three characteristics must be available to all devices and services, without gateway devices or other latency-inducing extra hops.

● Tight logical-physical integration for provisioning and troubleshooting: The network control system cannot just tell the network devices how to configure themselves; there must be full, two-way communication. This tight integration provides a real-time view of network utilization, latency, and other parameters, enabling the control system to more effectively deploy needed services and troubleshoot any problems that may occur. Without this integration, provisioning is performed without any insight, and troubleshooting requires examining two distinct network structures: a virtual one and a physical one.

Cisco Application Centric Infrastructure

Cisco® Application Centric Infrastructure (ACI) delivers true microsegmentation effectively. Cisco ACI abstracts the network, devices, and services into a hierarchical, logical object model. In this model, administrators specify the services (firewalls, load balancers, etc.) that are applied, the kind of traffic they are applied to, and the traffic that is permitted. These services can be chained together and are presented to application developers as a single object with a simple input and output. Connection of application-tier objects and server objects creates an application network profile (ANP). When this ANP is applied to the network, the devices are told to configure themselves to support it. Tier objects can be groups of hundreds of servers, or just one; all are treated with the same policies in a single configuration step.
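The idea of chaining services into a single object with one input and one output is essentially function composition. The Python sketch below models packets as dictionaries and uses two hypothetical services: a filter that permits only ports 80 and 443, and a toy load balancer that picks a back end from the source port. It illustrates the abstraction only; it is not how Cisco ACI implements service chaining.

```python
from typing import Callable, Optional

Packet = dict
Service = Callable[[Packet], Optional[Packet]]


def firewall(pkt: Packet) -> Optional[Packet]:
    """Hypothetical filter: permit only HTTP and HTTPS, drop everything else."""
    return pkt if pkt["dport"] in (80, 443) else None


def load_balancer(pkt: Packet) -> Optional[Packet]:
    """Hypothetical balancer: rewrite the destination to one of two back ends."""
    backends = ["10.1.1.11", "10.1.1.12"]
    return {**pkt, "dst": backends[pkt["sport"] % len(backends)]}


def chain(*services: Service) -> Service:
    """Compose services into one object with a single input and a single output."""
    def run(pkt: Packet) -> Optional[Packet]:
        for service in services:
            result = service(pkt)
            if result is None:  # an earlier service dropped the packet
                return None
            pkt = result
        return pkt
    return run


web_tier_chain = chain(firewall, load_balancer)
print(web_tier_chain({"src": "203.0.113.5", "sport": 40001,
                      "dst": "10.1.1.10", "dport": 443}))
# {'src': '203.0.113.5', 'sport': 40001, 'dst': '10.1.1.12', 'dport': 443}
print(web_tier_chain({"src": "203.0.113.5", "sport": 40002,
                      "dst": "10.1.1.10", "dport": 22}))  # None
```

Callers see only `web_tier_chain`; adding or reordering services changes the chain definition, not the code that uses it.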

Cisco ACI provides this scalability and flexibility to both physical servers and virtual machines, and it integrates with the virtual machine management of several market-leading hypervisors. If permitted by the network policy, vendor A’s virtual machine can speak freely to vendor B’s virtual machine as if they were on the same hypervisor.

Cisco ACI exposes a published northbound Representational State Transfer (REST) API through XML and JavaScript Object Notation (JSON), as well as a Python software development kit (SDK), allowing easy integration with popular tools such as Cisco UCS® Director, OpenStack, Puppet, and Chef. The system also provides an open source southbound API, which allows third-party network service vendors to implement policy control.
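As a rough illustration of the JSON form of the northbound API, the Python sketch below builds a request body for a tenant containing one application profile with three tiers, and prepares (but does not send) an authenticated POST. The object class names (`fvTenant`, `fvAp`, `fvAEPg`), the `/api/mo/uni.json` path, the `/api/aaaLogin.json` login path, and the `APIC-cookie` token cookie reflect our understanding of the APIC REST API and should be checked against Cisco's API documentation; the controller address and tenant names are hypothetical.

```python
import json
import urllib.request

APIC = "https://apic.example.com"  # hypothetical controller address


def tenant_payload(tenant: str, profile: str, tiers: list[str]) -> dict:
    """JSON body for a tenant, one application profile, and its tier groups.

    Class names (fvTenant, fvAp, fvAEPg) are assumptions to verify.
    """
    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [{
                "fvAp": {
                    "attributes": {"name": profile},
                    "children": [
                        {"fvAEPg": {"attributes": {"name": tier}}} for tier in tiers
                    ],
                }
            }],
        }
    }


def build_post(path: str, body: dict, token: str) -> urllib.request.Request:
    """Prepare, but do not send, an authenticated POST to the controller.

    The token is assumed to come from an earlier login to /api/aaaLogin.json.
    """
    return urllib.request.Request(
        APIC + path,
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json",
                 "Cookie": f"APIC-cookie={token}"},
        method="POST",
    )


body = tenant_payload("shop", "storefront", ["web", "app", "db"])
req = build_post("/api/mo/uni.json", body, token="<token from login>")
print(req.get_method(), req.full_url)
# POST https://apic.example.com/api/mo/uni.json
```

Sending the request would be a call to `urllib.request.urlopen(req)`, with certificate handling appropriate to the deployment; the Python SDK mentioned above wraps this kind of exchange.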

The Cisco ACI fabric is built on Cisco Nexus® 9000 Series switches, the fastest and greenest 40-Gbps switches on the market today. A Cisco ACI fabric is deployed in a spine-and-leaf architecture and supports advanced equal-cost multipath (ECMP) routing, enabling 40 percent greater network efficiency than conventional architecture. Every end device, whether physical or virtual, connects to a leaf port, and every device’s traffic is switched at line rate through the fabric. There are no gateway devices to add latency or interfere with policy application.
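ECMP in a spine-and-leaf fabric typically works by hashing packet header fields so that each flow sticks to one path while different flows spread across all equal-cost spines. The Python sketch below uses SHA-256 over the 5-tuple for illustration; real switches use hardware hash functions and configurable field sets, and the four spine names are hypothetical.

```python
import hashlib
from collections import Counter

SPINES = ["spine1", "spine2", "spine3", "spine4"]  # hypothetical fabric


def pick_spine(src_ip: str, dst_ip: str, proto: str, sport: int, dport: int,
               spines: list[str] = SPINES) -> str:
    """Choose an uplink by hashing the flow's 5-tuple.

    Every packet of a flow hashes to the same spine (no reordering), while
    different flows spread across all equal-cost paths.
    """
    key = f"{src_ip}|{dst_ip}|{proto}|{sport}|{dport}".encode()
    digest = hashlib.sha256(key).digest()
    return spines[int.from_bytes(digest[:4], "big") % len(spines)]


# 1,000 flows between the same two hosts, differing only in source port.
load = Counter(pick_spine("10.1.1.10", "10.1.3.30", "tcp", port, 5432)
               for port in range(40000, 41000))
print(load)  # counts per spine, each near 250
```

Per-flow hashing keeps packets of a flow in order, at the cost of occasional imbalance when a few very large flows land on the same spine.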

The fabric is abstracted by the logical model, not virtualized. Therefore, the network control systems have full visibility into the physical domain as well as the virtual domain. For example, Cisco ACI maintains a real-time measure of latency through every path in the network, which other networking solutions can’t do. The system maintains health scores for all devices, applications, and tenants, quickly flagging any degraded condition and programmatically reconfiguring the network as needed.
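This section does not describe how health scores are computed, so the Python sketch below uses an invented scheme purely to illustrate the idea of rolling device health up to an application and flagging degraded components: each device starts at 100 and loses a fixed penalty per active fault, the application score is the mean of its devices' scores, and devices below a threshold are flagged worst first. The penalty weights, threshold, and fault data are hypothetical and are not Cisco's formula.

```python
from statistics import mean

# Hypothetical penalty weights per fault severity (not Cisco's actual formula).
PENALTY = {"critical": 40, "major": 20, "minor": 5}


def device_health(faults: list[str]) -> int:
    """Start at 100 and subtract a penalty for each active fault, floored at 0."""
    return max(0, 100 - sum(PENALTY[f] for f in faults))


def application_health(device_faults: dict[str, list[str]]) -> float:
    """Roll device scores up to the application that depends on those devices."""
    return mean(device_health(f) for f in device_faults.values())


def degraded(device_faults: dict[str, list[str]], threshold: int = 80) -> list[str]:
    """Devices scoring below the threshold, worst first."""
    scores = {d: device_health(f) for d, f in device_faults.items()}
    return sorted((d for d, s in scores.items() if s < threshold), key=scores.get)


faults = {"leaf1": [], "leaf2": ["major"], "spine1": ["critical", "minor"]}
print(round(application_health(faults), 1), degraded(faults))  # 78.3 ['spine1']
```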

Conclusion

Microsegmentation provides internal control of traffic within the data center and can greatly enhance a data center’s security posture. Cisco ACI is the only solution available today that enables true microsegmentation with the performance, scalability, and visibility that modern applications demand.